ChatGPT Health Is Here: How Responsible AI Can Unlock Patient Engagement and Clinical Efficiency

January 2026 is a watershed moment for digital health: OpenAI introduced ChatGPT Health, a dedicated, privacy‑segmented space that lets people connect medical records and wellness apps to get context‑aware guidance—explicitly designed to support, not replace, clinical care. At the same time, OpenAI launched ChatGPT for Healthcare, a HIPAA‑ready workspace now rolling out at leading systems like Cedars‑Sinai, Boston Children’s, AdventHealth, HCA, Baylor Scott & White, Stanford Medicine Children’s Health, Memorial Sloan Kettering, and UCSF.

For healthcare marketers, clinical leaders, and IT strategists, this signals a shift from pilots to enterprise‑grade AI in daily workflows. Below, we unpack what’s new, why it matters, and how to deploy responsibly—grounded in the latest data and peer examples.

The Demand Signal: 40M Daily Health Conversations

OpenAI’s January 2026 analysis shows that more than 40 million people now ask ChatGPT a health question every day, and that health topics account for over 5% of all ChatGPT messages globally. Roughly one in four of its ~800 million weekly users submits a health prompt each week. The majority of U.S. health chats (about 7 in 10) happen outside clinic hours, and 1.6–1.9 million messages per week focus on insurance navigation (plan comparisons, claims, billing, cost sharing). In rural “hospital deserts,” ChatGPT averages ~580,000 health messages per week, suggesting AI is already filling access gaps in low‑density regions.

Implication for marketers: Patient journeys increasingly begin in AI chat—after hours, in underserved areas, and around benefits and billing. Your brand’s messaging, content, and care-navigation experiences need to be AI‑ready (structured, clear, safety‑minded).

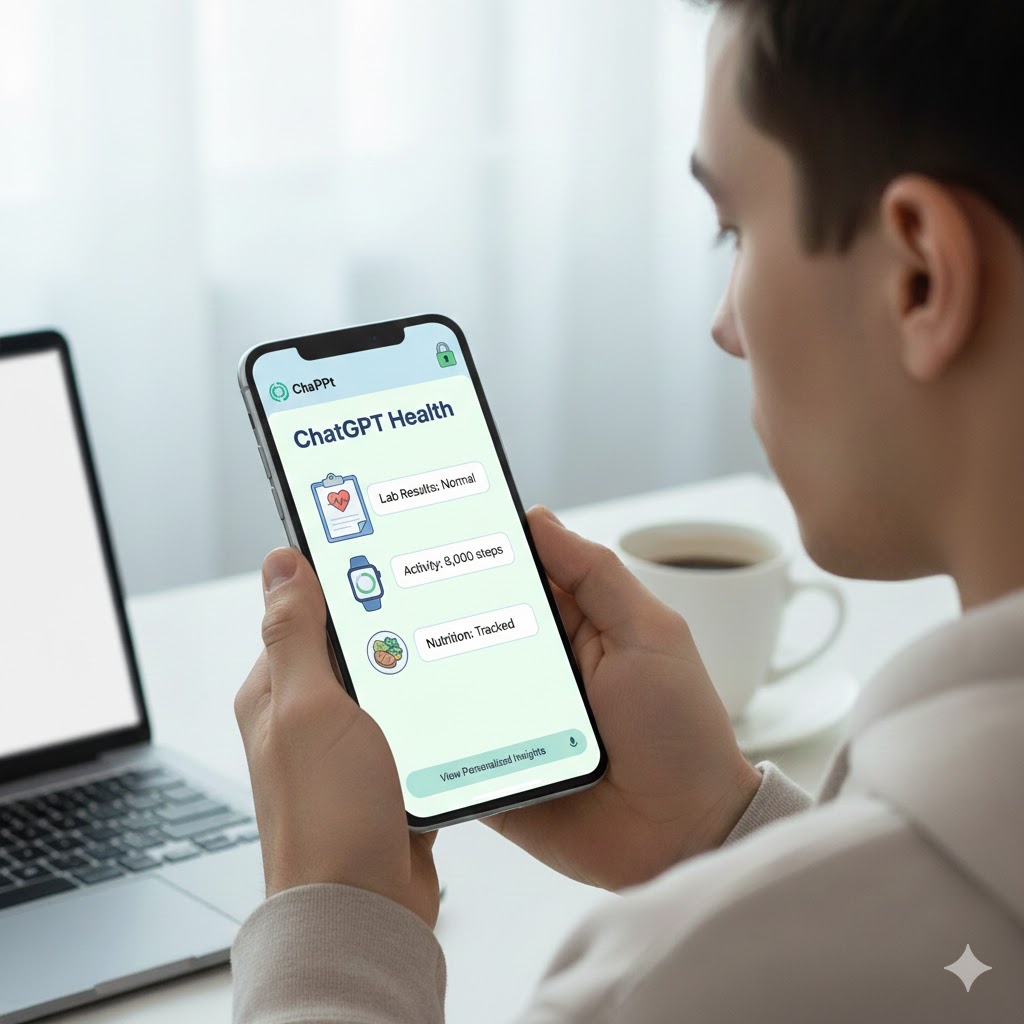

What ChatGPT Health Adds (Consumer Side)

Dedicated health tab & sandboxed privacy. ChatGPT Health operates in an isolated, encrypted space with separate memory, and conversations in Health are not used to train foundation models. Users can securely connect medical records via b.well and link Apple Health, MyFitnessPal, Function, WeightWatchers, and other apps, so responses are grounded in the person’s own data (e.g., summarizing lab results, preparing questions for a visit, spotting trends). Access is rolling out via waitlist to U.S. users on web and iOS, initially excluding the EU, UK, and Switzerland.

Clinically informed design. OpenAI says the system was developed over two years with 260+ physicians and ~600,000 evaluation points covering safety, clarity, and escalation behavior (e.g., when to urge urgent care).

Important guardrails. OpenAI emphasizes Health is not for diagnosis or treatment; it is a navigation aid and comprehension layer (labs, instructions, insurance trade‑offs).

Marketer takeaway: This creates new earned‑media and service‑content opportunities (patient education, pre‑visit prep, benefits literacy), but those opportunities require clear disclaimers and clinical escalation cues.

What ChatGPT for Healthcare Adds (Enterprise Side)

HIPAA‑ready workspace with governance. ChatGPT for Healthcare supports RBAC, SAML SSO/SCIM, audit logs, data residency, customer‑managed keys, and Business Associate Agreements, with a commitment that enterprise content is not used to train models. Responses can pull evidence, with citations, from peer‑reviewed studies and guidelines, and the workspace integrates with SharePoint/Teams/Outlook so outputs reflect institutional policies and care pathways.

Early adopters (signal, not proof). Major systems are rolling out the workspace to reduce administrative burden, augment clinical reasoning, and create patient‑ready materials (discharge instructions, prior authorization letters, clinical letters) using templates built for real workflows. OpenAI’s product post lists these early users and positions the suite as an enterprise foundation for regulated environments.

Marketer takeaway: Enterprise AI is evolving from “innovation labs” to governed platforms embedded in standard workflows. Messaging must evolve beyond “AI pilots” to value at scale: reduced friction, consistent patient communications, and measurable throughput gains.

Case Signals & Emerging Use Cases

- Boston Children’s Hospital has publicly described hospital‑wide, HIPAA‑compliant GPT deployments and governance over the past year, including staff enablement and AI for documentation and operations—an exemplar of institutional change management.

- Peer‑reviewed work shows ChatGPT can structure discharge summaries and synthesize long patient narratives—with explicit caveats that clinicians must verify outputs. This supports time savings in documentation while keeping the clinician in control.

- Children’s hospitals report AI use cases from internal chatbots to error detection in notes and coding audit improvements—pointing to operational lift and safety guardrails in pediatric settings.

Marketer takeaway: Showcase specific, governed workflows (e.g., prior auth drafting, discharge education, benefits guidance) rather than generic “AI disruption.” Evidence and governance are differentiators.

The Privacy Conversation You Must Lead

HIPAA nuance. Consumer use of AI chat (e.g., in the Health tab) may fall outside HIPAA unless a covered entity is involved through a BAA, which heightens the importance of clear consent, data isolation, and deletion controls. Even with ChatGPT Health’s enhanced privacy, experts advise treating uploads conservatively and understanding where HIPAA applies, and where it does not.

Regulatory attention. U.S. regulators (FTC) have sought documentation from chatbot providers on safety and data practices—especially for minors—underscoring the need for transparent governance in consumer‑facing health experiences.

Marketer takeaway: Lead with privacy‑first narratives—isolation of health data, no training on health chats, and enterprise BAAs—while educating audiences on appropriate use and clinical escalation.

Strategy Playbook: From Pilot to Proof to Scale

- Map high‑friction journeys. Start in benefits & billing literacy, pre‑visit preparation, and discharge education—areas with measurable throughput and satisfaction impact and lower clinical risk. (Aligns with OpenAI’s evidence‑backed, template‑ready workflows.)

- Embed governance and brand tone. Use enterprise connectors (e.g., SharePoint) and RBAC so outputs reflect approved policies, FAQs, and style guides; tighten prompts and templates to preserve organizational voice.

- Instrument outcomes. Track time‑to‑draft for prior auth letters, call‑deflection from benefits questions, MyChart message clarity, and post‑discharge comprehension—then ladder those gains to brand trust metrics. (Early adopters emphasize reduced administrative work and improved reasoning throughput.)

- Publish a safety rubric. State plainly: no diagnosis, no treatment advice, clear escalation triggers (e.g., chest pain, neuro symptoms), and human‑in‑the‑loop review for patient‑facing clinical content. (Consistent with Health’s design and mainstream coverage.)

- Educate on privacy boundaries. Explain HIPAA vs. consumer chat, BAA coverage for enterprise use, and data deletion options—backed by the Help Center’s no‑training policy for enterprise data.
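To make the safety rubric concrete, here is a minimal Python sketch of a keyword‑based escalation screen that could sit in front of a patient‑facing assistant. Everything in it is an assumption for illustration: the `ESCALATION_PATTERNS` table, its category names, and the `screen_message` function are hypothetical, and a real trigger list must come from your clinical governance team rather than from this example.

```python
import re
from dataclasses import dataclass

# Hypothetical escalation rubric (illustrative only): phrases that should route a
# patient to clinical care instead of an AI-generated answer. A production list
# must be written and maintained by clinical governance, not copied from here.
ESCALATION_PATTERNS: dict[str, list[str]] = {
    "cardiac": [r"\bchest pain\b", r"\bchest tightness\b"],
    "neurological": [
        r"\bslurred speech\b",
        r"\bface drooping\b",
        r"\bsudden (?:weakness|numbness)\b",
    ],
}


@dataclass
class ScreenResult:
    escalate: bool          # True if any escalation category matched
    categories: list[str]   # matched categories, in rubric order


def screen_message(text: str) -> ScreenResult:
    """Return the escalation categories, if any, that a patient message matches."""
    lowered = text.lower()
    hits = [
        category
        for category, patterns in ESCALATION_PATTERNS.items()
        if any(re.search(pattern, lowered) for pattern in patterns)
    ]
    return ScreenResult(escalate=bool(hits), categories=hits)


if __name__ == "__main__":
    # Example messages (hypothetical): one should escalate, one should not.
    print(screen_message("I've had chest pain since this morning"))
    print(screen_message("Can you explain my deductible?"))
```

The screen deliberately over‑triggers: it does not handle negation, so "no chest pain" still matches. In a safety backstop, a false alarm that routes someone to a nurse line costs less than a missed emergency, and the screen complements, rather than replaces, the model’s own escalation behavior and human review.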

Visuals You Can Include (with Suggested Captions)

Figure 1 — The AI‑Ready Patient Journey

A flowchart showing pre‑visit questions (symptoms, labs, benefits), in‑visit support (clarifying instructions), and post‑visit comprehension (discharge, follow‑ups), with escalation gates to clinical care. (Grounded by Health’s “support, not diagnose” remit.)

Figure 2 — Privacy Segmentation

A diagram illustrating ChatGPT Health’s separate space, isolated memory, and no‑training policy for health conversations; next to enterprise RBAC/BAA stack for ChatGPT for Healthcare.

Figure 3 — Where the Volume Already Is

Bar chart of 40M daily health users, 1.6–1.9M insurance messages/week, 70% of health chats after hours, and ~580k messages/week from hospital deserts, annotated with marketing implications.

Figure 4 — Workflow Templates

Screenshot mockups (or sample layouts) for prior authorization letters, patient instructions, and discharge summaries within a governed workspace.

Messaging Frameworks for Healthcare Marketers

- Trust by Design: “Our AI experiences operate in a separate, encrypted health space; enterprise use is HIPAA‑ready, with a BAA and no training on your data.”

- Care, Not Cure: “AI organizes information and prepares you for better conversations—it does not diagnose or treat.”

- Evidence + Efficiency: “Clinicians get citations and templates for real tasks, reducing admin burden and improving consistency.”

- Access Equity: “We’re meeting patients after hours and in hospital deserts with clear, safe guidance that connects them to the right level of care.”

Balanced Risks—and How to Address Them

Mainstream coverage raises privacy and safety concerns: HIPAA doesn’t govern all consumer use, AI can err or hallucinate, and guardrails must be maintained, especially in mental health or other high‑stakes scenarios. Adopt conservative disclosures, escalation prompts, human review for clinical content, and a clear incident response plan (e.g., content takedowns, model behavior audits), aligned to enterprise governance. [cnet.com], [time.com], [help.openai.com]

What “Good” Looks Like in Q1–Q2 2026

- Pilot 3 high‑ROI workflows (benefits literacy chatbot; discharge instruction drafting; prior‑auth letters) with measured baselines.

- Stand up a cross‑functional AI council (Compliance, Privacy, Clinical, Marketing) and publish a public AI use statement and patient safety rubric.

- Activate content for ChatGPT Health audiences: lab‑result explainers, insurance explainer series, post‑visit checklists—all with escalation tags and accessibility review.

- Educate providers and contact center teams on how to respond when patients arrive with AI‑generated summaries—and how to incorporate verified outputs into care.
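Measuring pilots against baselines can be as simple as comparing medians per workflow. The sketch below is a hypothetical illustration: the `WorkflowMetric` class and its fields are assumptions, and the sample minutes are made‑up placeholders; real inputs should come from your own time‑tracking or EHR audit data.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class WorkflowMetric:
    """Hypothetical container for one piloted workflow's timing data."""
    name: str
    baseline_minutes: list[float]  # e.g., time-to-draft before the pilot
    pilot_minutes: list[float]     # the same measure during the pilot

    def median_change_pct(self) -> float:
        """Percent change in median time; negative means the pilot is faster."""
        base = median(self.baseline_minutes)
        pilot = median(self.pilot_minutes)
        if base == 0:
            raise ValueError("baseline median must be non-zero")
        return (pilot - base) / base * 100


if __name__ == "__main__":
    # Placeholder numbers for illustration only, not real pilot results.
    prior_auth = WorkflowMetric(
        name="prior-auth letters",
        baseline_minutes=[30, 40, 50],
        pilot_minutes=[20, 25, 30],
    )
    print(f"{prior_auth.name}: {prior_auth.median_change_pct():.1f}% change in median time")
```

Medians are used instead of means because a handful of unusually complex cases (a multi‑drug prior authorization, say) can skew an average. Report sample sizes alongside the percentage so small pilots are not over‑read.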

Conclusion: Responsible AI Is a Brand Strategy

ChatGPT Health and ChatGPT for Healthcare reflect patient behavior, meeting rising demand for clarity, access, and navigation, while bringing governance and evidence to enterprise workflows. For healthcare marketers, the win is twofold: building trust through privacy‑first communication, and proving value through measurable efficiency gains and clearer patient education.

The question is no longer if AI belongs in your health experience—it’s how responsibly, and how soon.
